putzfimel's neighborhood:

| Filename: FxBlzvVaMAAcevS.jpg | (previous profile or banner image) |

tldr 99%

| Filename: 1421558057.jpeg | Last seen banner image |

anime 99%

| Filename: VrQc3WLL.jpeg | Last seen profile image |

toons other 99%

| Filename: Fg2Q9yFUUAAj-Fm.png | Source tweet: Nov 6, 2022 2:42 AM |

tldr 45%

| Filename: Fg2Q9yIUYAI4iSR.png | Source tweet: Nov 6, 2022 2:42 AM |

skull mask 28%

| Filename: Fg2Q9yIVQAExtLk.png | Source tweet: Nov 6, 2022 2:42 AM |

tldr 80%

| Filename: Fg2Q9zCUYAIIv9X.png | Source tweet: Nov 6, 2022 2:42 AM |

tldr 64%

| Filename: Fmu4KivaUAIgthc.jpg | Source tweet: Jan 18, 2023 5:50 AM |

etc 94%

| Filename: FrxSdjsaIAAzva2.png | Source tweet: Mar 21, 2023 8:23 PM |

hitler 29%

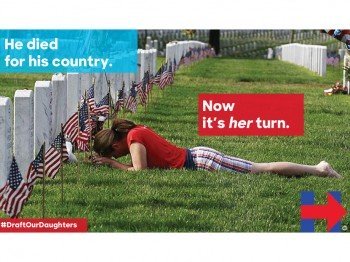

| Filename: Fq__UJXakAITeTo.jpg | Source tweet: Mar 12, 2023 6:38 AM |

us flag 82%

| Filename: Fq68SHZaUAAWyBg.png | Source tweet: Mar 11, 2023 7:06 AM |

face 86%

| Filename: Fq6qLemakAAgR92.jpg | Source tweet: Mar 11, 2023 5:47 AM |

art_other 54%

| Filename: Ft0NclpaUAAwFJh.jpg | Source tweet: Apr 16, 2023 6:31 AM |

art_other 55%

toons other 40%

| Filename: FvOrvfrakAAm0xC.jpg | Source tweet: May 3, 2023 8:09 PM |

skull 61%

etc 27%