LennyLampshades' neighborhood:

| Filename: PJ5DEUEj.jpg | Last seen profile image |

critters 99%

| Filename: EYwj86FWAAEV1fZ.jpg | Source tweet: May 24, 2020 5:16 AM |

art_other 99%

| Filename: 1520373827.jpeg | Last seen banner image |

etc 61%

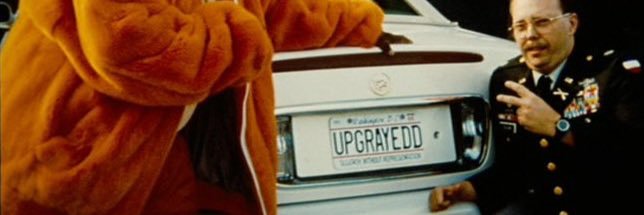

| Filename: FEqG2ctXsAIs3Qq.jpg | Source tweet: Nov 20, 2021 6:42 PM |

hitler 29%

| Filename: EgEshr7WsAE04co.jpg | Source tweet: Aug 23, 2020 2:56 AM |

face 84%

| Filename: Ein8W2jWoAEdeTG.jpg | Source tweet: Sep 23, 2020 7:43 PM |

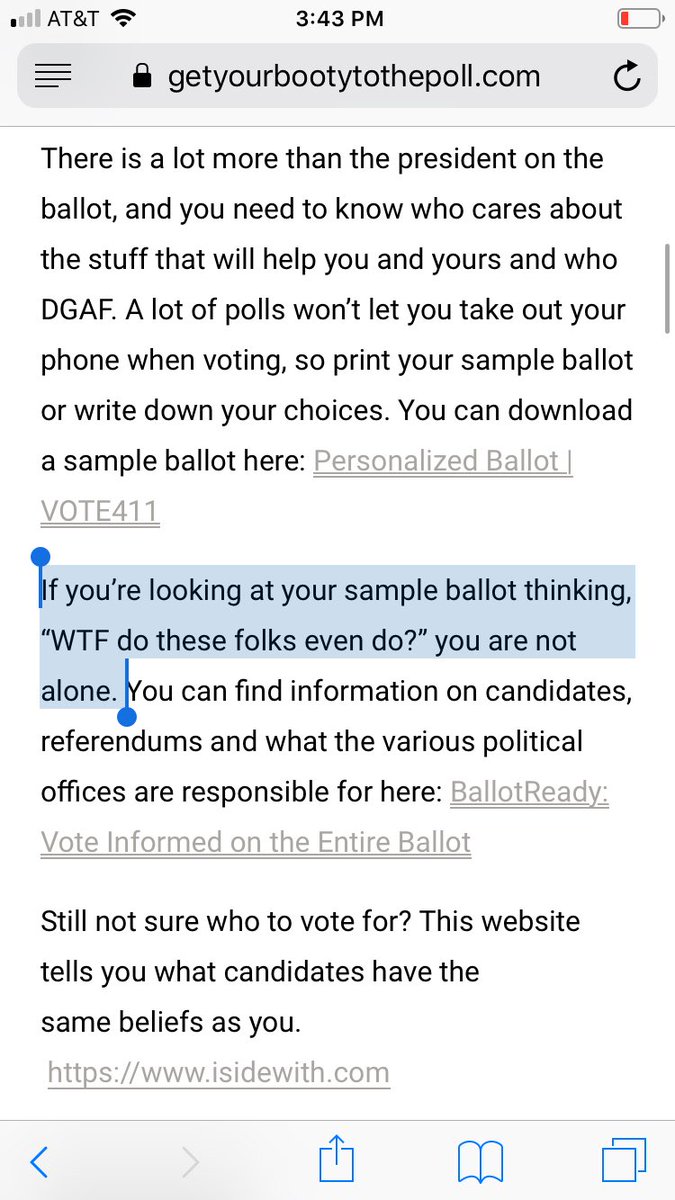

tldr 99%

| Filename: EZIQvBuXQAAUr-y.jpg | Source tweet: May 28, 2020 7:43 PM |

| Filename: EZW4XLWXsAA05t5.jpg | Source tweet: May 31, 2020 3:51 PM |

hitler 29%