CulturalDarkne1's neighborhood:

| Filename: FOPnctuXIAAkvwj.jpg | Source tweet: Mar 19, 2022 9:52 PM |

militarized 58%

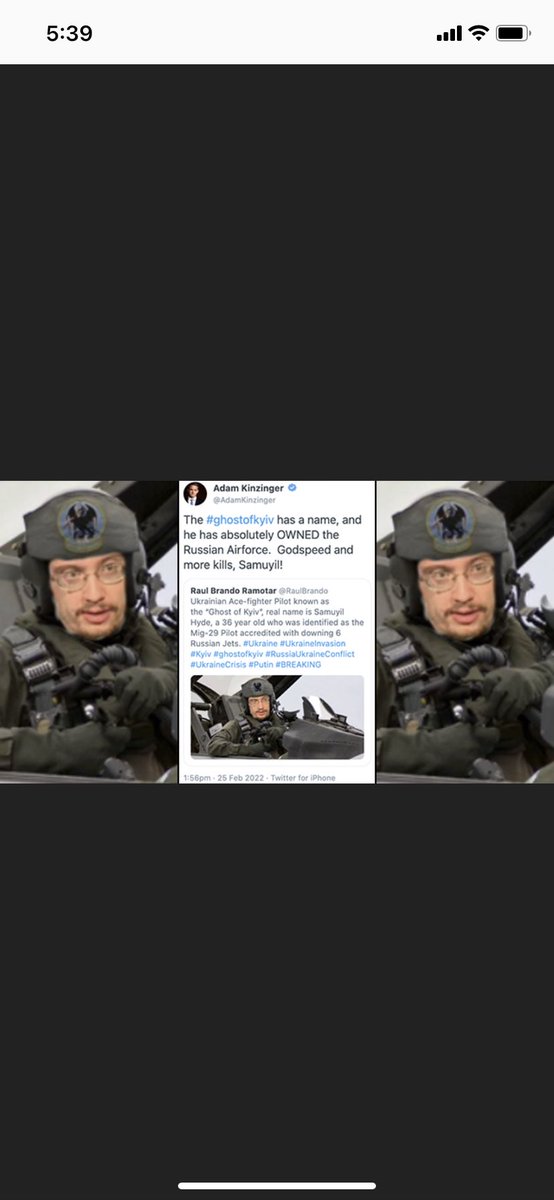

| Filename: FX6aXAzXgAARt0Q.jpg | Source tweet: Jul 18, 2022 1:40 AM |

anime 53%

mspaint style 35%

| Filename: FhLwgR-XgAAhrom.jpg | Source tweet: Nov 10, 2022 6:51 AM |

anime 52%

mspaint style 36%

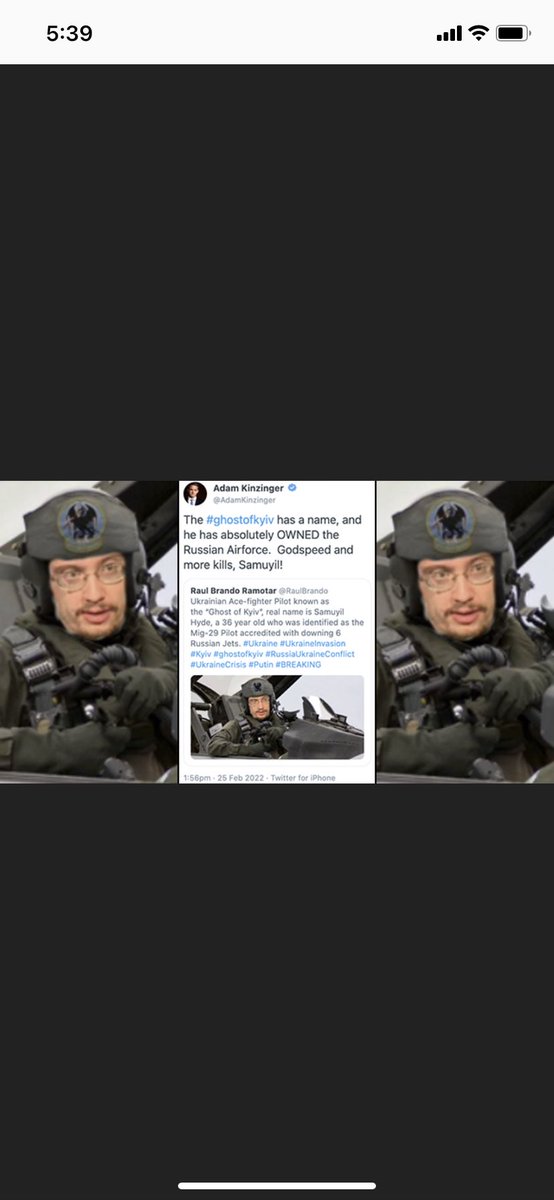

| Filename: FX6ZgjtXwAAkK8N.jpg | Source tweet: Jul 18, 2022 1:36 AM |

joker 43%

| Filename: HjpxnFIF.jpg | Last seen profile image |

face 42%

militarized 30%

| Filename: 1568223071.jpeg | Last seen banner image |

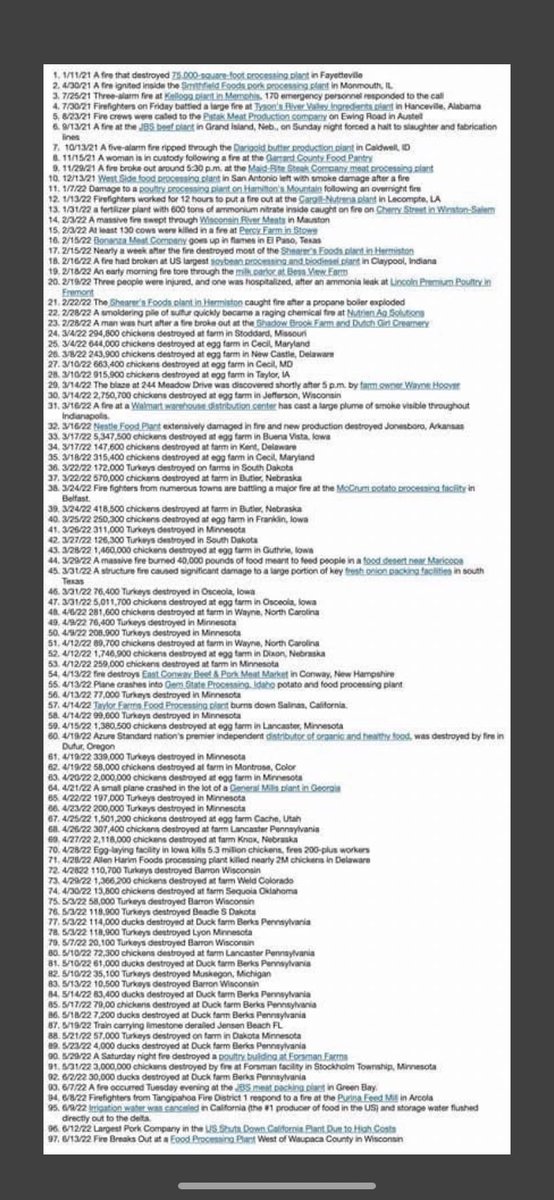

etc 71%

| Filename: FfGOTB-X0AApvQv.jpg | Source tweet: Oct 15, 2022 8:32 AM |

tldr 100%

| Filename: FfFeWgxXEAAyBp-.jpg | Source tweet: Oct 15, 2022 5:03 AM |

mspaint style 88%

| Filename: FX5G7h6XoAEC3_N.jpg | Source tweet: Jul 17, 2022 7:35 PM |

mspaint style 88%

| Filename: FW3jaUYWAAAWIZy.jpg | Source tweet: Jul 5, 2022 2:05 AM |

mspaint style 99%