VaxSideEffects' neighborhood:

| Filename: Fl1sEfwWYAA690Z.jpg | Source tweet: Jan 7, 2023 3:19 AM |

stars and bars 94%

| Filename: QTlOUjNu.jpg | Last seen profile image |

etc 65%

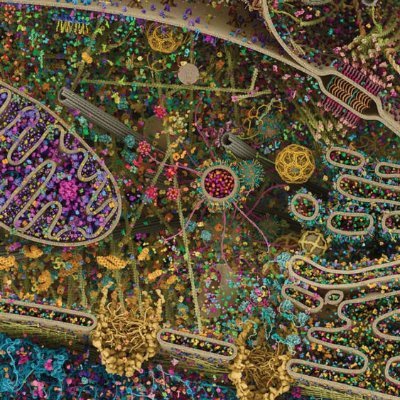

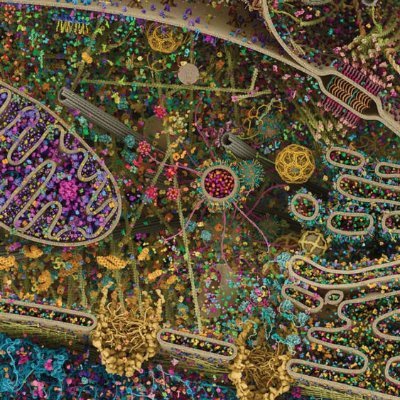

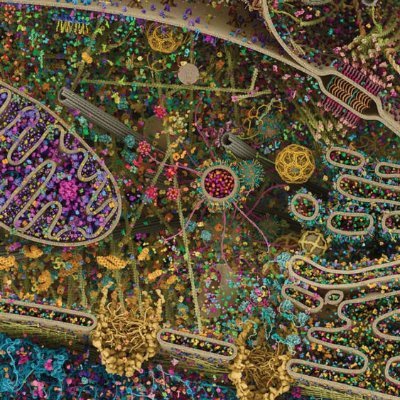

| Filename: FmoAtcMWYAUcYir.jpg | Source tweet: Jan 16, 2023 9:50 PM |

militarized 64%

art_other 28%

| Filename: Fm31o5pXEAEr8B0.jpg | Source tweet: Jan 19, 2023 11:36 PM |

militarized 26%

| Filename: FsUIEDAXgAE_0gO.png | Source tweet: Mar 28, 2023 2:45 PM |

militarized 90%

| Filename: 1672339376.jpeg | Last seen banner image |

art_other 89%

| Filename: FmpiesgWQAEtUHb.jpg | Source tweet: Jan 17, 2023 4:57 AM |

art_other 99%

| Filename: FndWodYXwAExYW5.jpg | Source tweet: Jan 27, 2023 6:27 AM |

tldr 100%

| Filename: FrocROwWcAA2pQ_.jpg | Source tweet: Mar 20, 2023 3:09 AM |

etc 99%

| Filename: FviWw5RXwAE_4gk.png | (previous profile or banner image) |

tldr 99%

| Filename: FviVmQTWcAEYRZs.png | (previous profile or banner image) |

tldr 99%