D4M_EN's neighborhood:

| Filename: 1619333394.jpeg | Last seen banner image |

hitler 29%

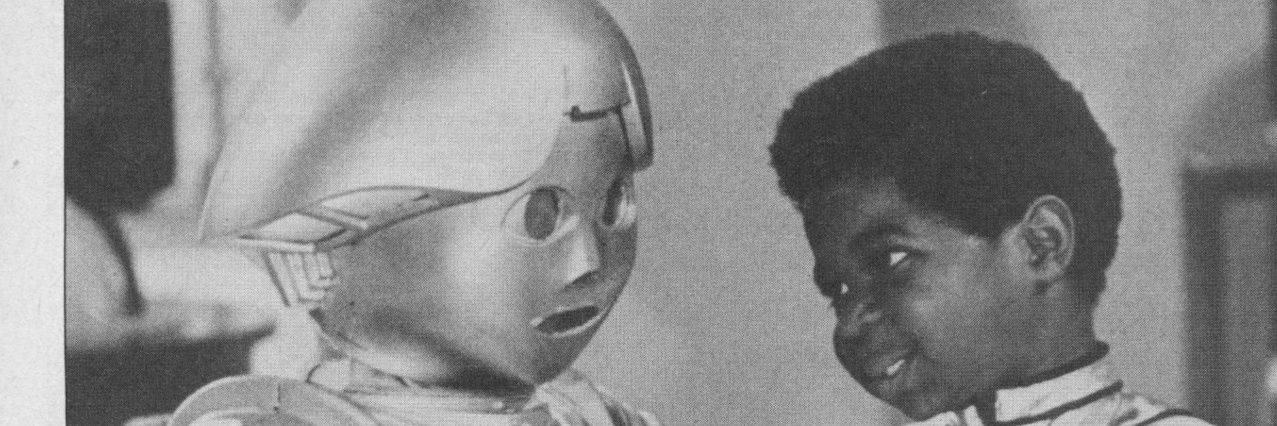

| Filename: Ff9NJsdXoAAILOw.jpg | Source tweet: Oct 26, 2022 12:46 AM |

| Filename: FfaISSgXkAU62g2.jpg | Source tweet: Oct 19, 2022 5:18 AM |

mspaint style 48%

pepe 36%

| Filename: FWZ9kcdWAAICJAr.jpg | Source tweet: Jun 29, 2022 8:11 AM |

art_other 54%

etc 35%

| Filename: dLbzNdbj.jpg | Last seen profile image |

art_other 82%

| Filename: Fe-Q7vsXkAk3O7K.jpg | Source tweet: Oct 13, 2022 7:27 PM |

etc 70%

| Filename: FR-tDI3WYAArpUm.jpg | Source tweet: May 5, 2022 8:06 AM |

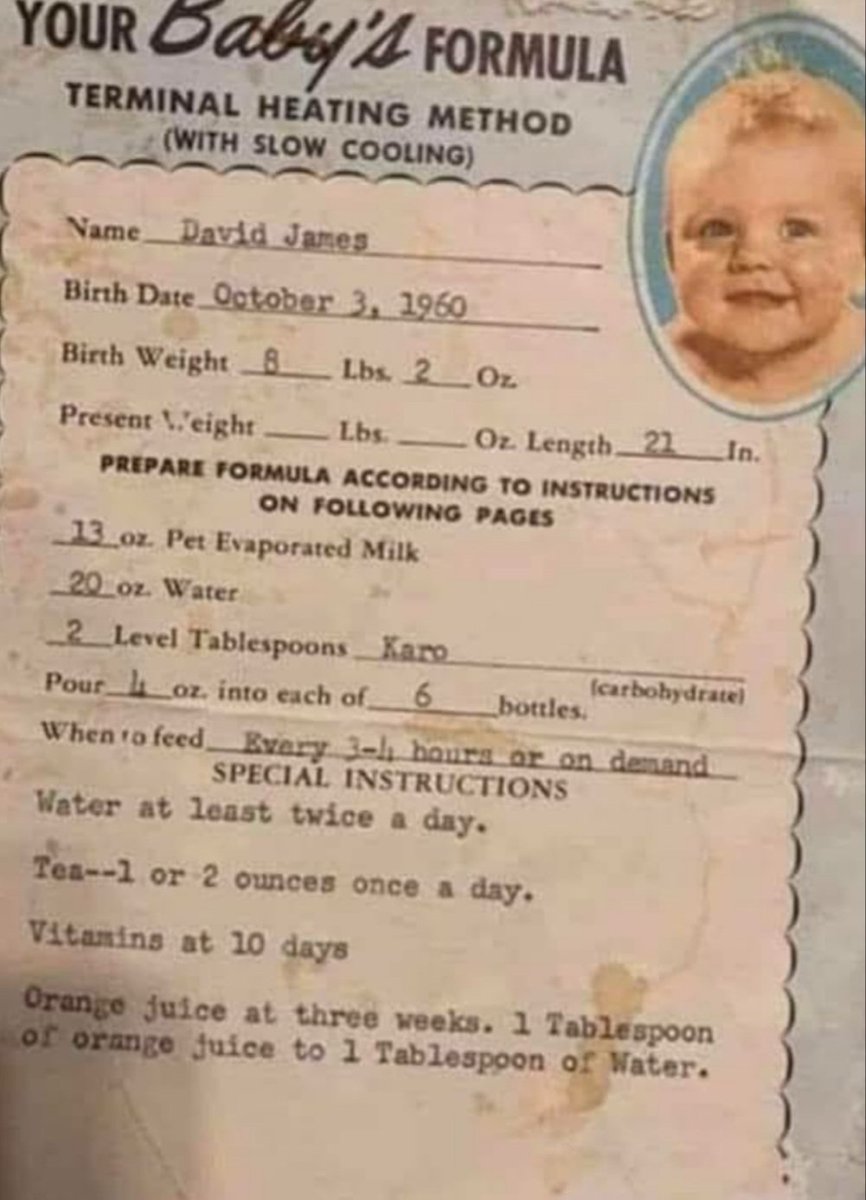

tldr 99%

| Filename: FSsFSYcWYAE85qu.jpg | Source tweet: May 14, 2022 3:34 AM |

map 92%

| Filename: FTHjqY_XsAEoc6h.jpg | Source tweet: May 19, 2022 11:37 AM |

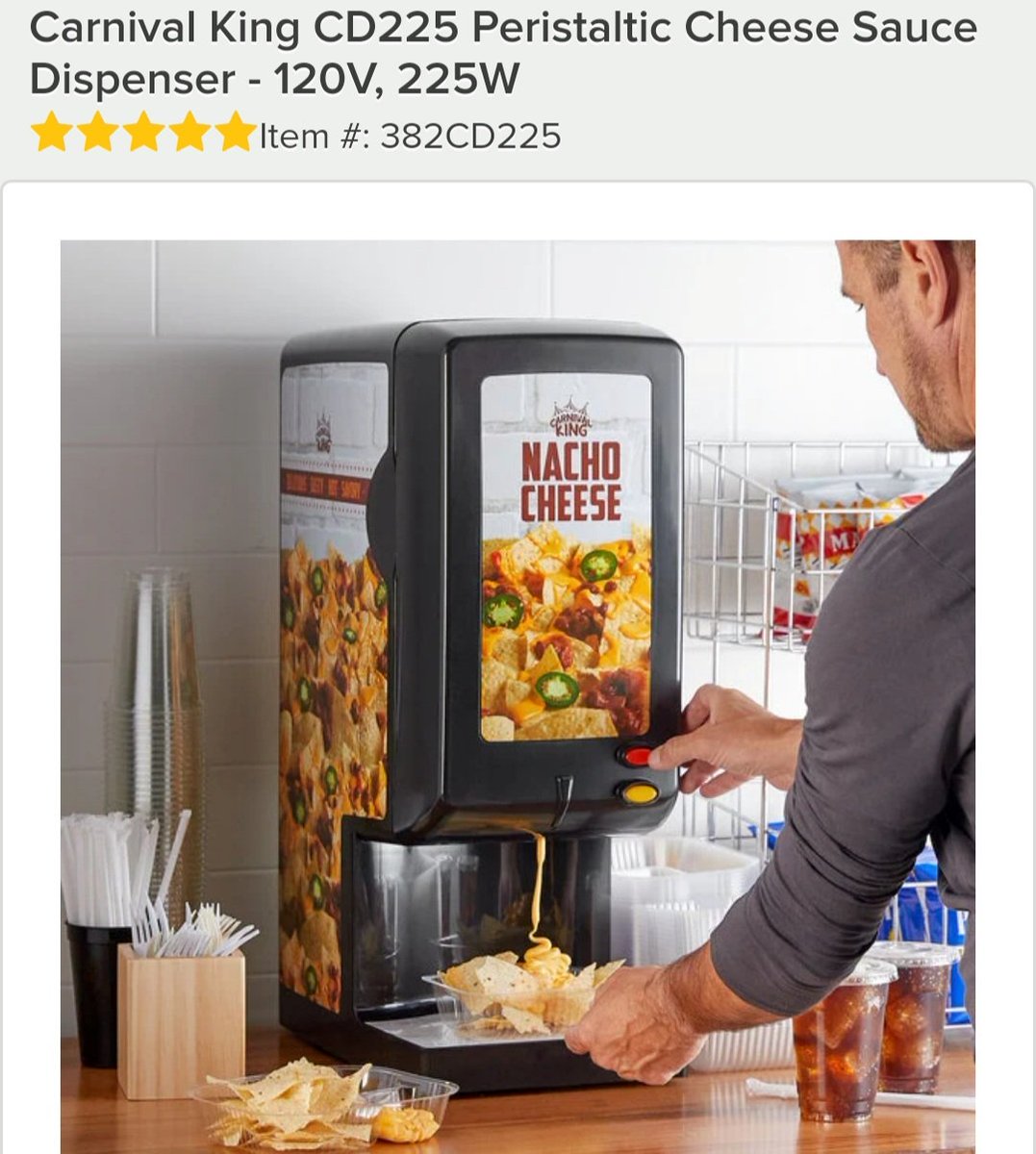

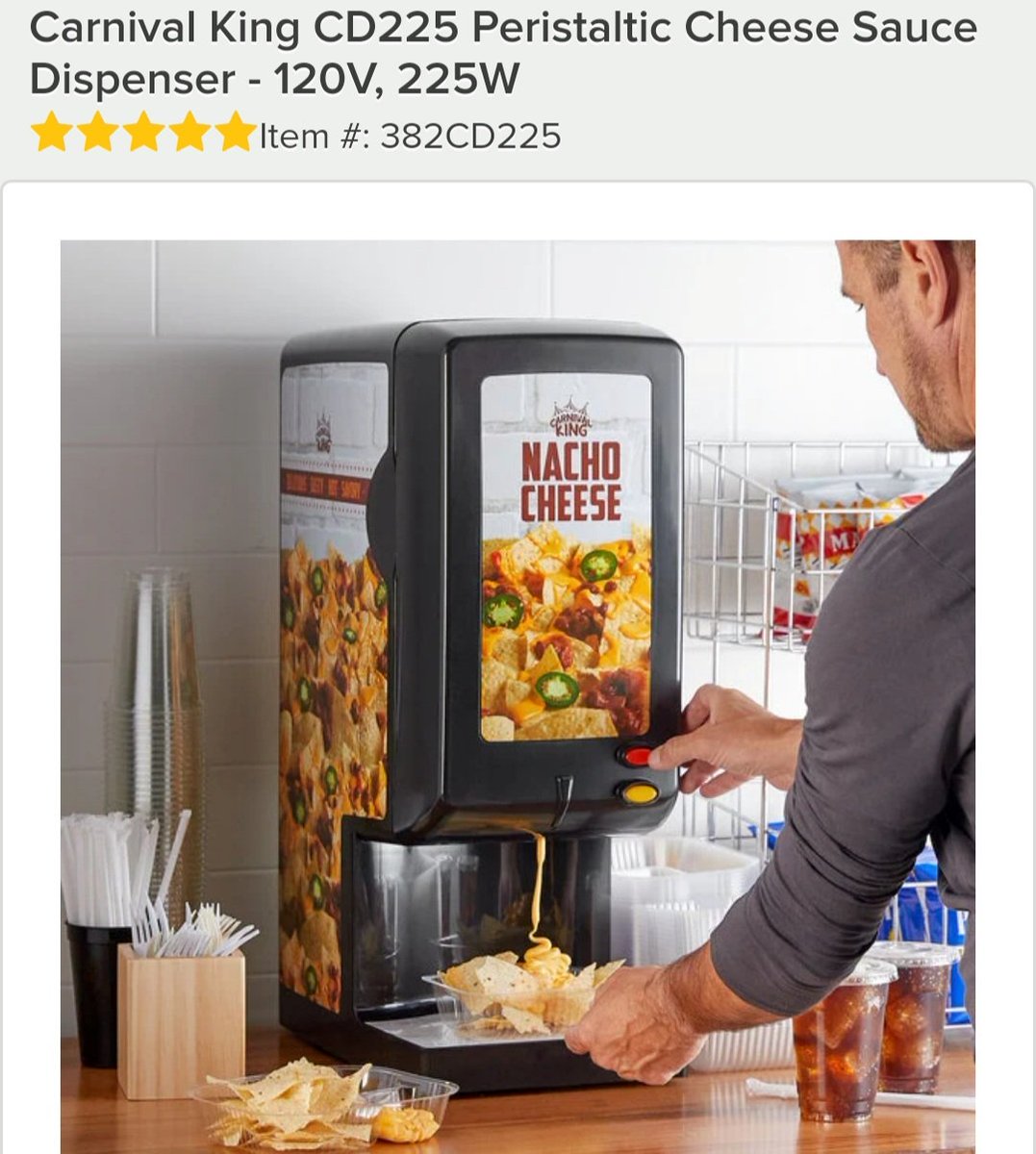

etc 99%

| Filename: FVAWevTXoAAhHPR.jpg | Source tweet: Jun 11, 2022 10:33 PM |

toons other 88%

| Filename: FVHQQ2LWAAIaG15.jpg | Source tweet: Jun 13, 2022 6:44 AM |

toons other 55%

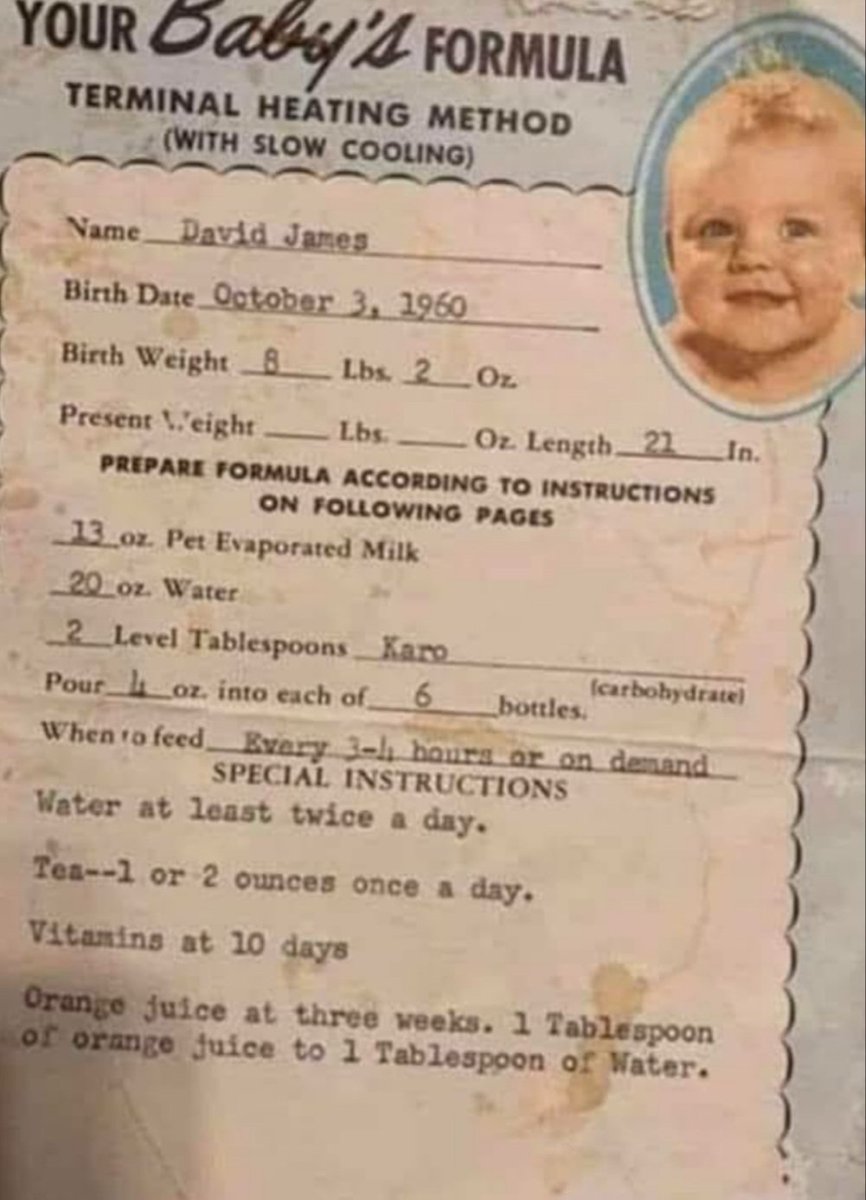

| Filename: FZJnQdLXgAE4R9q.jpg | Source tweet: Aug 2, 2022 10:46 AM |

tldr 99%