prime98704851's neighborhood:

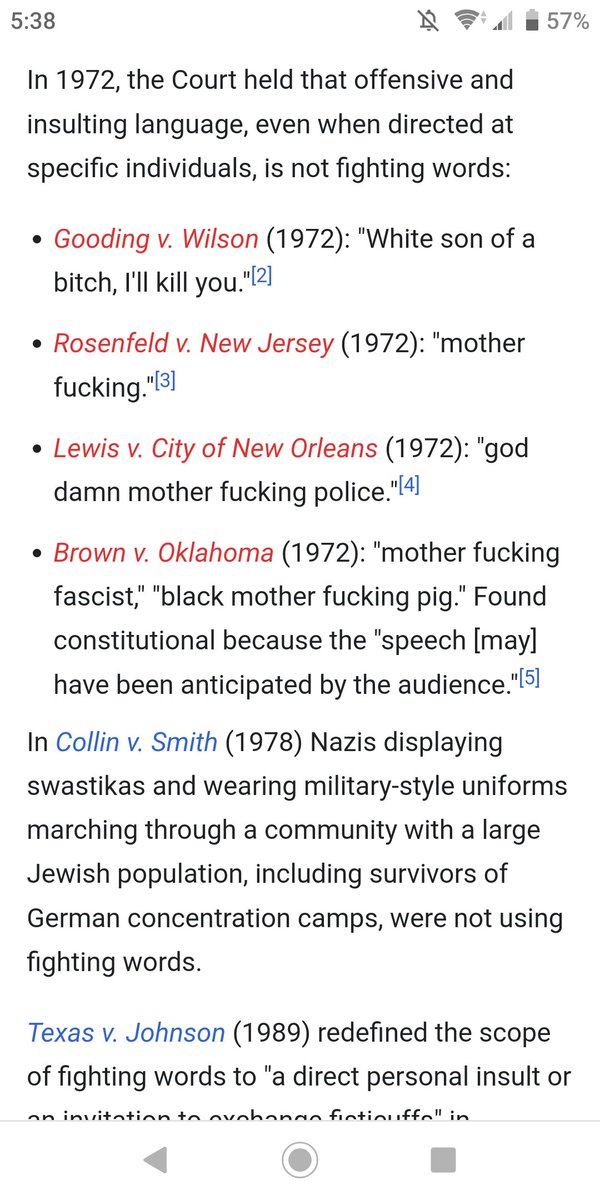

| Filename: FrtmZPragAEhKhE.jpg | Source tweet: Mar 21, 2023 3:11 AM |

militarized 82%

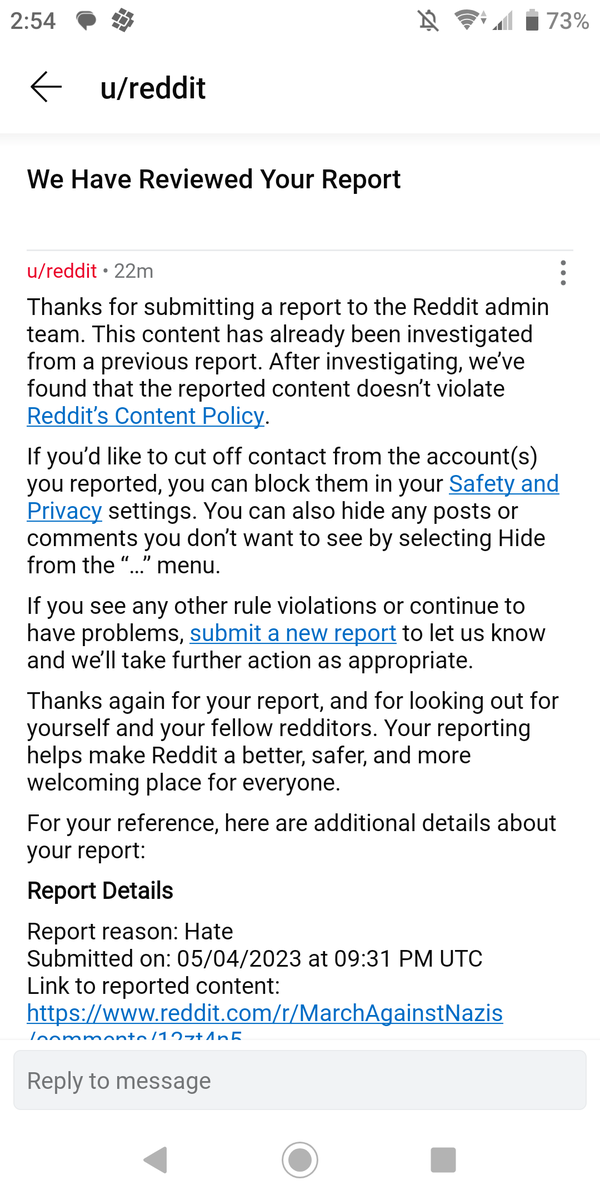

| Filename: Fv_d6YaaEAE5adE.jpg | (previous profile or banner image) |

skull mask 50%

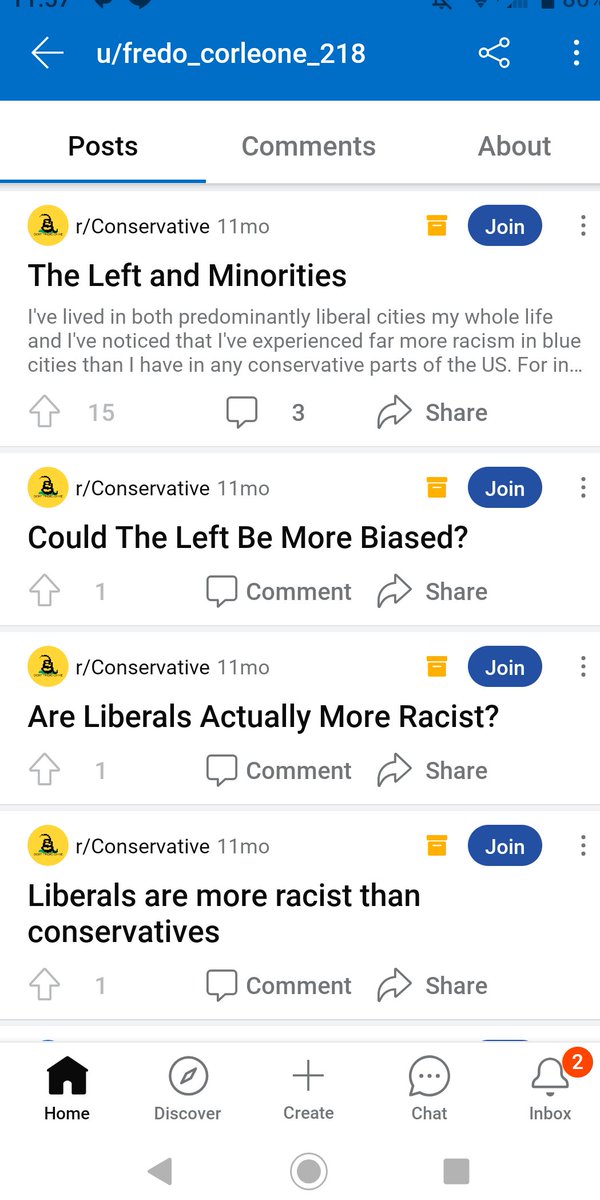

| Filename: RJN8fvIr.jpg | Last seen profile image |

us flag 98%

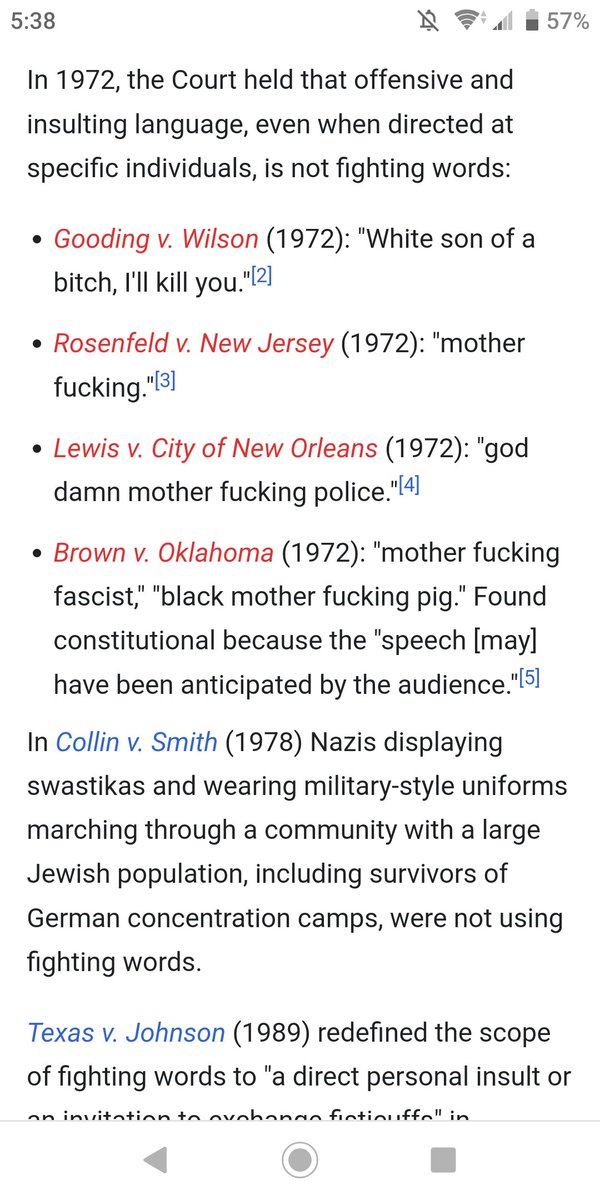

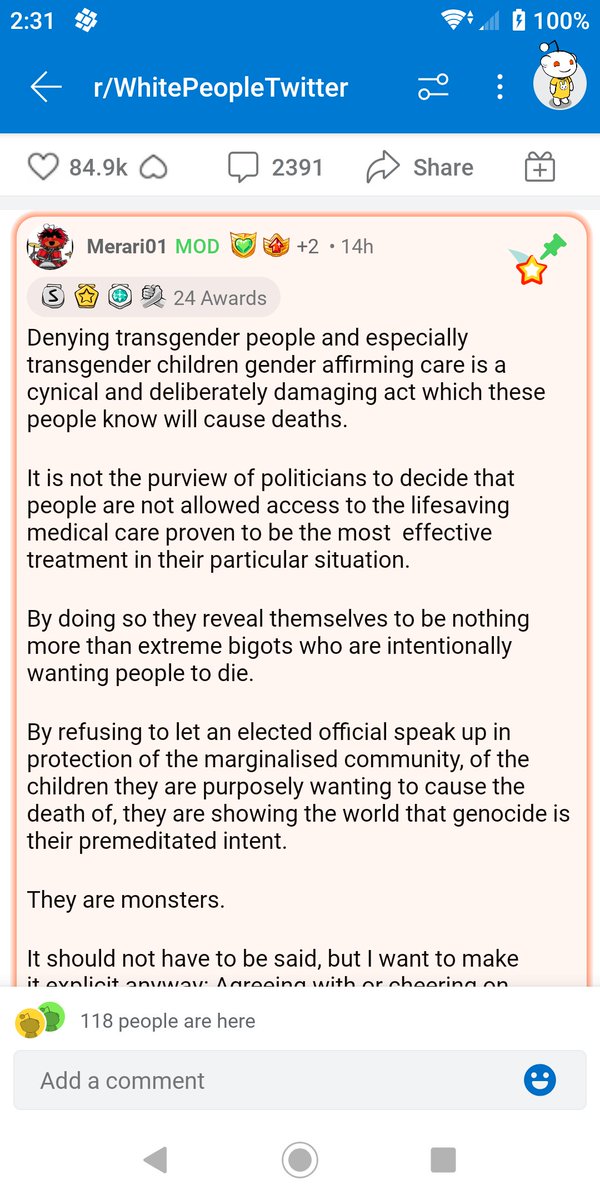

| Filename: FsgjdiLakAAiIZH.jpg | Source tweet: Mar 31, 2023 12:41 AM |

tldr 99%

| Filename: FrXPUfpaUAE8Syt.jpg | Source tweet: Mar 16, 2023 6:59 PM |

tldr 98%

| Filename: FvUNXehaQAIROt7.png | (previous profile or banner image) |

tldr 99%

| Filename: FugFRCzaQAATMry.jpg | (previous profile or banner image) |

tldr 90%

| Filename: FuRL2naaIAAoinn.jpg | (previous profile or banner image) |

tldr 99%

| Filename: FuIGfpqagAEg-FZ.jpg | (previous profile or banner image) |

tldr 98%