Iammannwell's neighborhood:

| Filename: Fn7QSvGXEAAhlOD.jpg | (previous profile or banner image) |

critters 58%

| Filename: FtJOnzIXsAENZLW.jpg | Source tweet: Apr 7, 2023 10:12 PM |

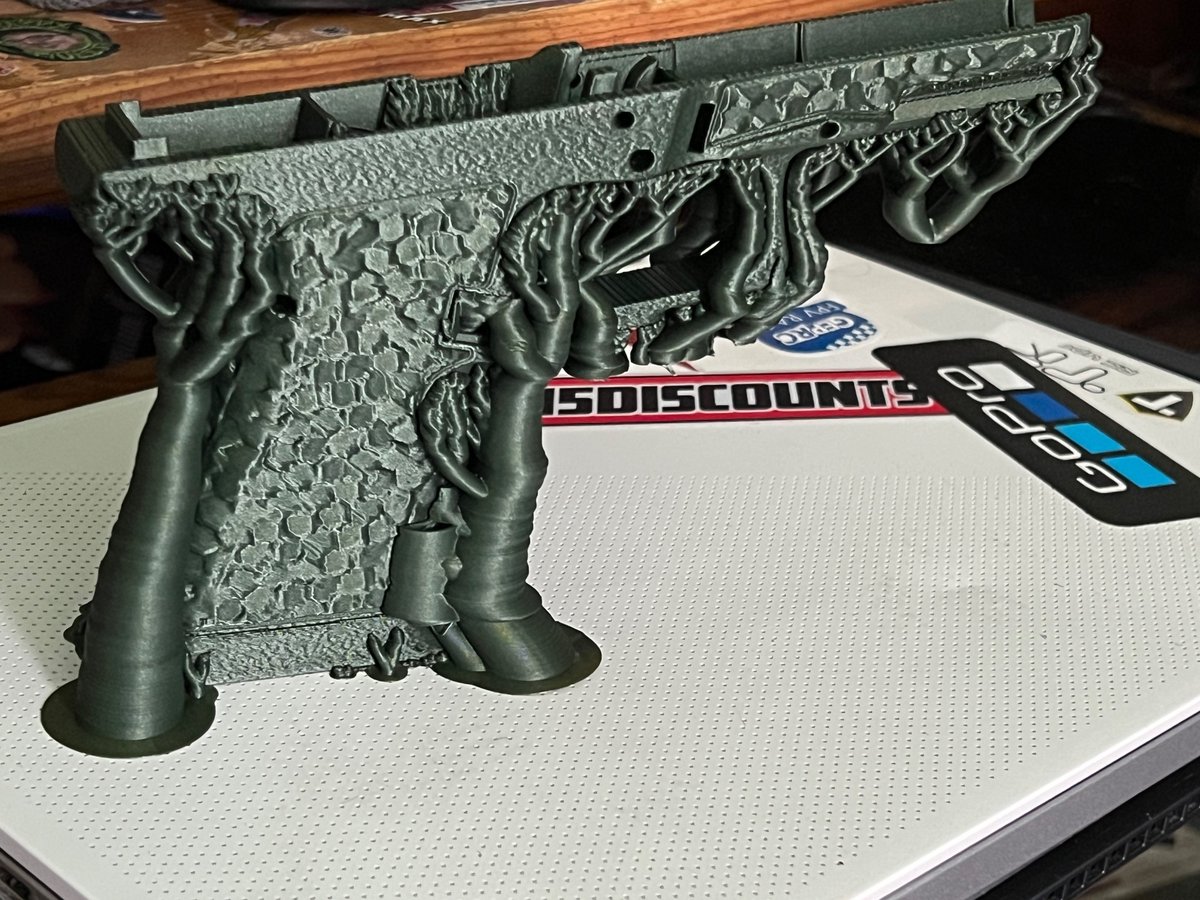

firearm 99%

| Filename: FtRpsUJXoAAANeg.jpg | (previous profile or banner image) |

firearm 99%

| Filename: FnbA78WWIAE8mGg.jpg | (previous profile or banner image) |

firearm 99%

| Filename: Fnqr8ZxWIAAUC9_.jpg | (previous profile or banner image) |

firearm 99%

| Filename: FtRmCESWAAE5ZuW.jpg | (previous profile or banner image) |

firearm 100%

| Filename: FtR2RutWwAoHBpA.jpg | (previous profile or banner image) |

firearm 99%

| Filename: FtR2RusX0AAmdTa.jpg | (previous profile or banner image) |

firearm 99%

| Filename: FtR2RusXsAArt-l.jpg | (previous profile or banner image) |

firearm 98%

| Filename: ehX9c_Rl.jpg | Last seen profile image |

art_other 98%

| Filename: 1671398689.jpeg | Last seen banner image |

etc 46%

| Filename: Fn7tdXXXEAE9jGu.jpg | (previous profile or banner image) |

art_other 91%